Description

CommemorativeCoin-Assistant is a collection of helper scripts for subscribing to commemorative coins through banks' websites. The scripts speed up the subscription process, in particular by automatically filling in required information such as the subscriber's name, phone number and ID card number.

Files

├── BankofChina.js: script for Bank of China (AKA: BOC)

├── ChinaConstructionBank.js: script for China Construction Bank (AKA: CCB)

└── README.md: this documentation

Environment

- Google Chrome or Firefox

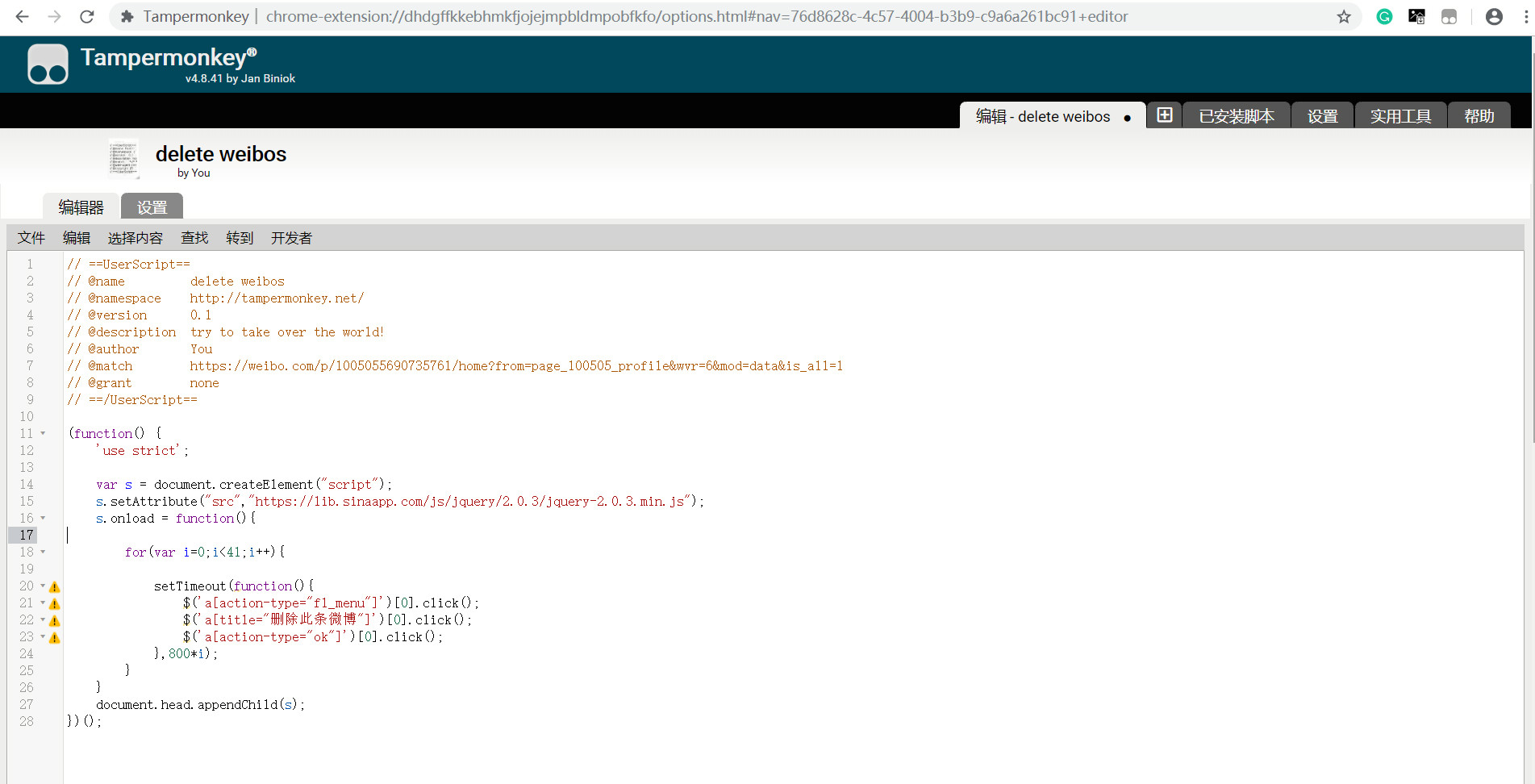

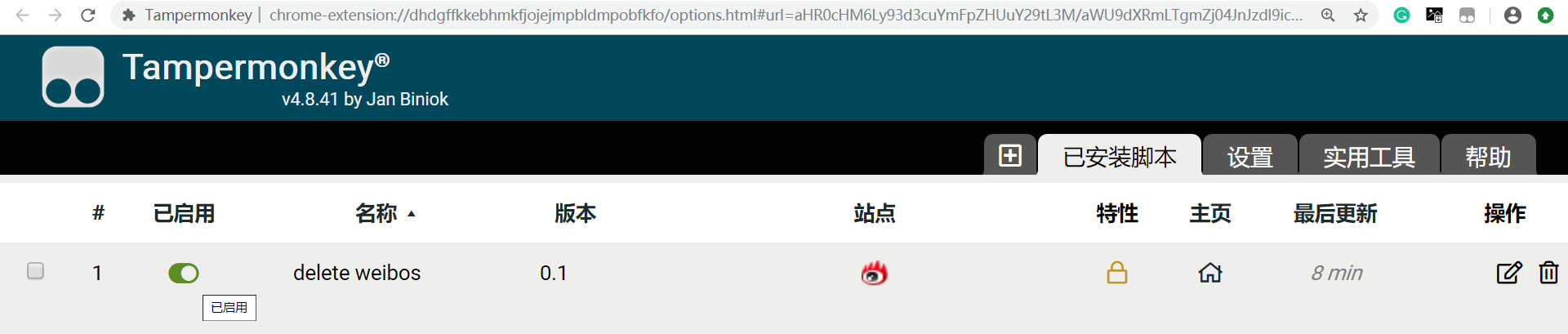

- TamperMonkey (needed to run the script on CCB's website)

HOW-TO

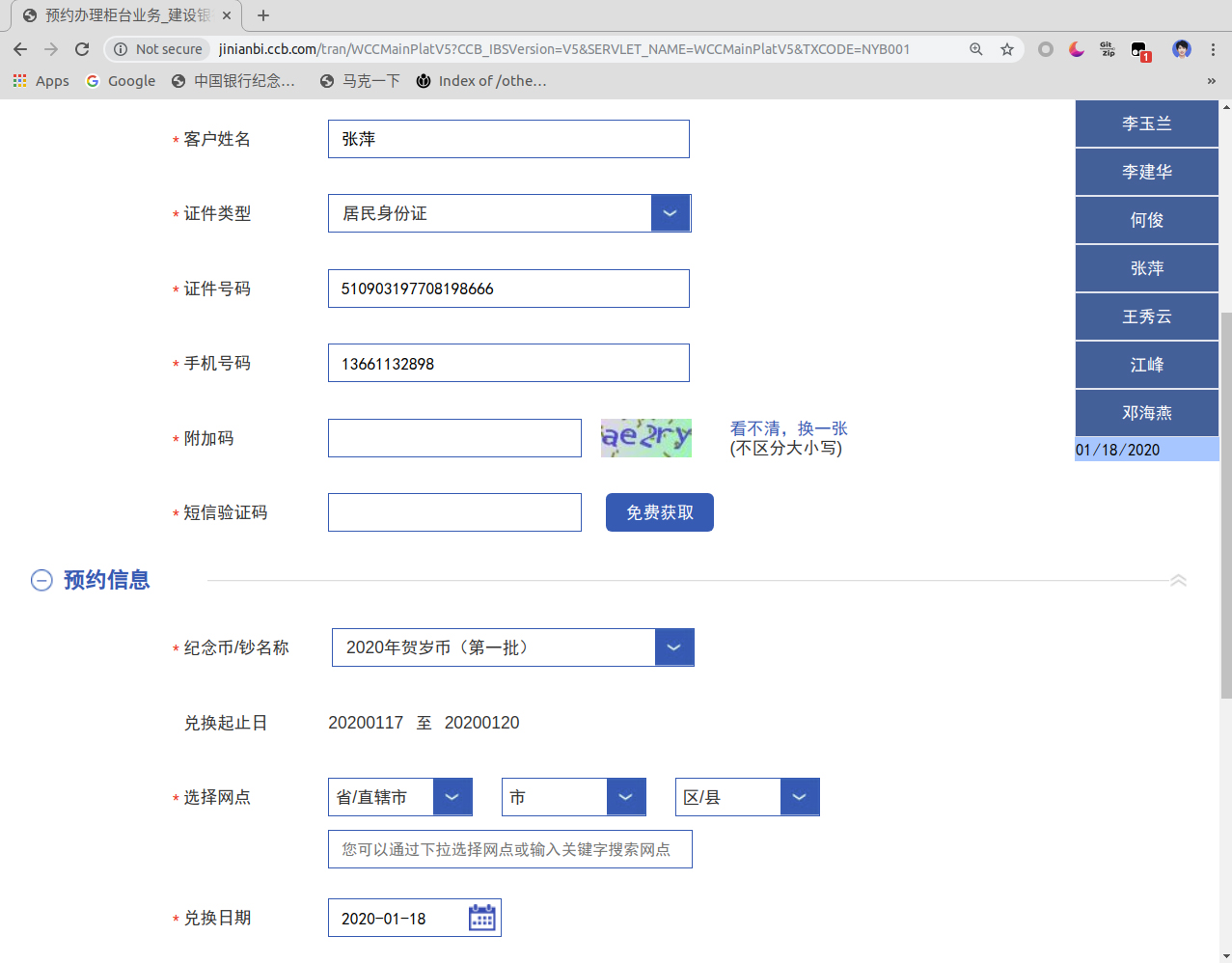

CCB

Edit ChinaConstructionBank.js: replace the example information with the subscribers' information and modify the exchange date. Then create a new script in TamperMonkey and paste the edited code into it.
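To make the editing step more concrete, the sketch below shows one possible layout for the editable part of a TamperMonkey script, together with a sanity check that catches typos in ID and phone numbers before the script is used. It is not the repository's actual ChinaConstructionBank.js: the `@match` URL is a placeholder, and the names `exchangeDate`, `subscribers`, `isValidIdNumber`, `isValidPhone` and `checkSubscribers`, the field names and the date format are all hypothetical. The ID check follows the standard check-digit rule for 18-digit resident ID numbers (GB 11643-1999); the phone pattern is only a rough heuristic.

```javascript
// ==UserScript==
// @name         CCB commemorative coin helper (sketch)
// @namespace    https://example.invalid/commemorative-coin
// @version      0.1
// @description  Hypothetical layout of the editable part of a CCB helper script
// @match        https://example.invalid/*
// @grant        none
// ==/UserScript==
// NOTE: the @match pattern is a placeholder; point it at CCB's real subscription page.
// All names and field names below are illustrative, not the repository's own.

// Exchange date (hypothetical format: YYYY-MM-DD); edit before each campaign.
const exchangeDate = "2024-01-01";

// One entry per subscriber; replace these sample entries with real data.
// The ID numbers are sample values with valid check digits, not real people.
const subscribers = [
  { name: "Example A", idNumber: "11010519491231002X", phone: "13800000000" },
  { name: "Example B", idNumber: "440524188001010014", phone: "13900000000" },
];

// GB 11643-1999 check digit: weighted sum of the first 17 digits, mod 11,
// mapped through "10X98765432".
const ID_WEIGHTS = [7, 9, 10, 5, 8, 4, 2, 1, 6, 3, 7, 9, 10, 5, 8, 4, 2];
const ID_CHECK_CODES = "10X98765432";

function isValidIdNumber(id) {
  const s = String(id).toUpperCase();
  if (!/^\d{17}[\dX]$/.test(s)) return false;
  let sum = 0;
  for (let i = 0; i < 17; i++) sum += Number(s[i]) * ID_WEIGHTS[i];
  return ID_CHECK_CODES[sum % 11] === s[17];
}

// Rough shape of a mainland mobile number: 11 digits starting with 1[3-9].
function isValidPhone(phone) {
  return /^1[3-9]\d{9}$/.test(String(phone));
}

// Returns human-readable problems; an empty array means the data looks sane.
function checkSubscribers(list) {
  const problems = [];
  for (const s of list) {
    if (!isValidIdNumber(s.idNumber)) problems.push(`${s.name}: bad ID number`);
    if (!isValidPhone(s.phone)) problems.push(`${s.name}: bad phone number`);
  }
  return problems;
}

// Report problems in the console when the script starts.
for (const problem of checkSubscribers(subscribers)) console.warn(problem);
```

With `@grant none`, the warnings should appear in the page's developer console; if nothing is printed, the entries passed the check.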

Open CCB's subscription website and the script will run automatically.

Click a name in the list on the right, and that person's credential (ID) number and mobile number will be filled into the corresponding fields automatically.

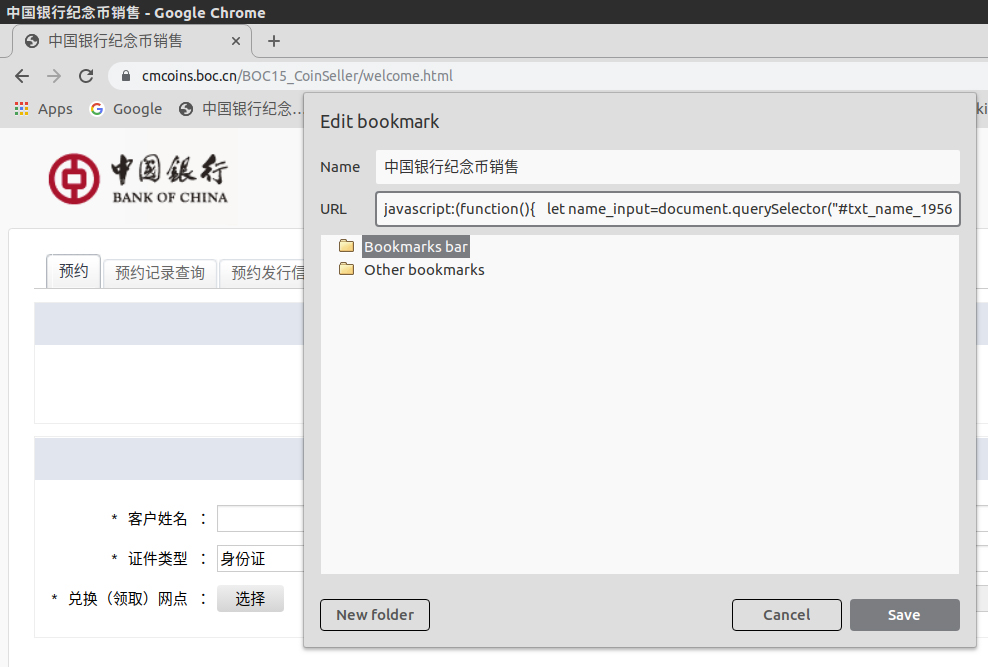

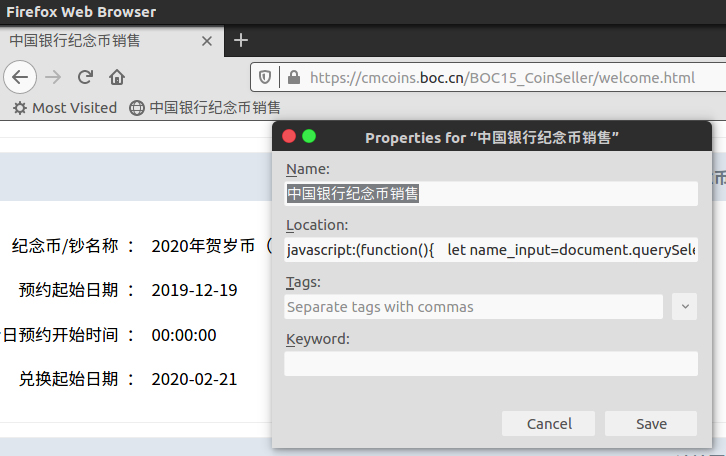
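The click-to-fill behaviour described above could work roughly as follows. This is a minimal sketch under assumptions: `fillSubscriber` and `renderNameList` are hypothetical names, each subscriber is assumed to be an object with `name`, `idNumber` and `phone` fields, and locating the input widgets on the bank's page (selectors, element ids) is left out because the README does not describe the page structure.

```javascript
// Copy one subscriber's details into the page's input widgets.
// `widgets` is assumed to hold the ID and phone input elements,
// found by whatever selectors fit the bank's page.
function fillSubscriber(subscriber, widgets) {
  const assignments = [
    [widgets.idInput, subscriber.idNumber],
    [widgets.phoneInput, subscriber.phone],
  ];
  for (const [input, value] of assignments) {
    if (!input) continue; // widget not present on this page
    input.value = value;
    // Fire an "input" event so scripts watching the field notice the new value.
    if (typeof input.dispatchEvent === "function") {
      input.dispatchEvent(new Event("input", { bubbles: true }));
    }
  }
}

// Add one clickable name per subscriber to `container`.
function renderNameList(doc, container, subscribers, widgets) {
  for (const s of subscribers) {
    const button = doc.createElement("button");
    button.type = "button"; // avoid submitting a surrounding form
    button.textContent = s.name;
    button.addEventListener("click", () => fillSubscriber(s, widgets));
    container.appendChild(button);
  }
}
```

In a userscript, `widgets` would hold the elements returned by `document.querySelector` for the page's ID and phone inputs, and `container` would be a panel appended to the page. Some pages react to `change` or other events rather than `input`, so dispatching `input` alone may not be enough everywhere.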

BOC

Edit BankofChina.js: replace the example information with the subscribers' information, then put all the code on a single line. Open Chrome or Firefox, create a bookmark on the bookmarks bar, paste the one-line script into the URL field (Chrome) or the Location field (Firefox), and save.

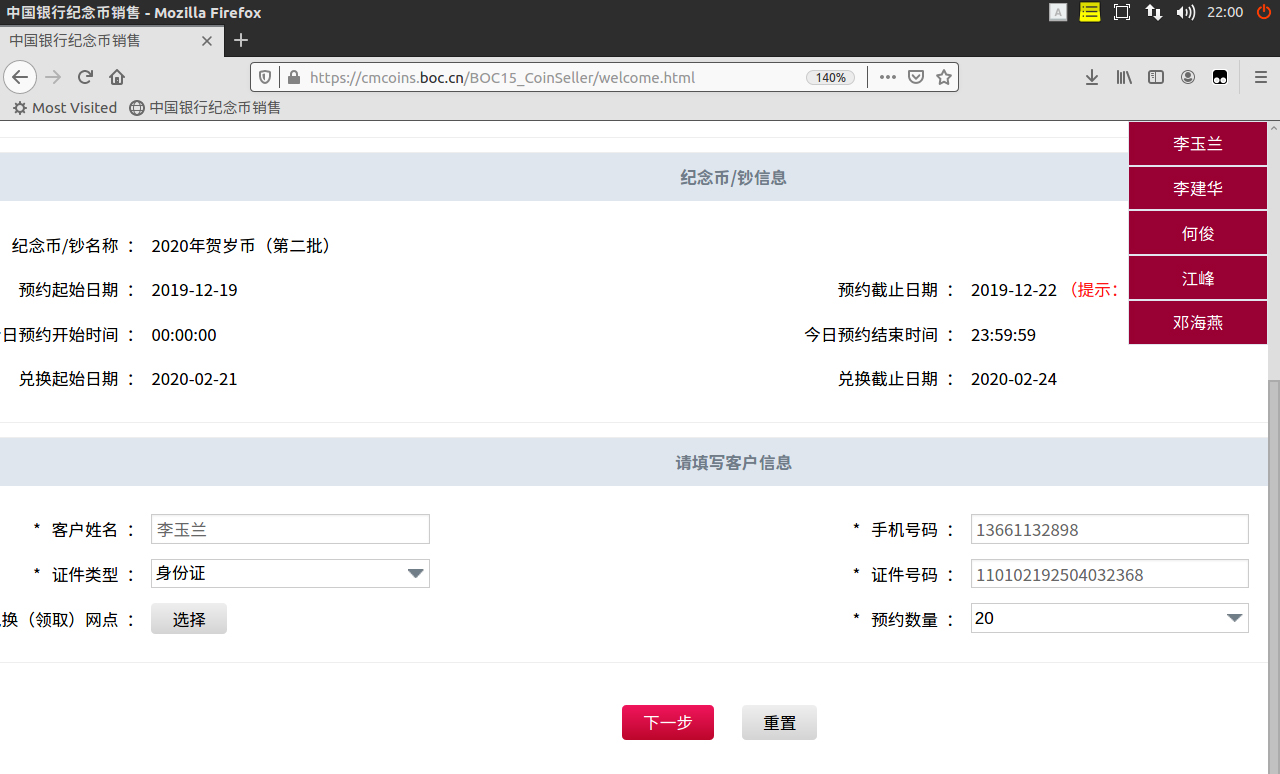
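Joining a script onto one line by hand is error-prone: a `//` comment swallows everything after it on the line, and code that relies on automatic semicolon insertion can break. One alternative, sketched below assuming Node.js is available, is to wrap the code in a function and percent-encode it; browsers percent-decode a `javascript:` URL before running it, so the original line breaks are restored. `toBookmarklet` is a hypothetical helper, not part of the repository.

```javascript
// Turn a multi-line script into a single-line bookmarklet URL.
// The function wrapper keeps the script's variables out of the page's global
// scope and makes the whole expression evaluate to undefined, so the browser
// does not replace the page with the script's result.
function toBookmarklet(source) {
  const wrapped = `(function () {\n${source}\n})();`;
  return "javascript:" + encodeURIComponent(wrapped);
}

// Example (Node.js): print the URL to paste into the bookmark's URL/Location field.
// const fs = require("fs");
// console.log(toBookmarklet(fs.readFileSync("BankofChina.js", "utf8")));
```

Because `encodeURIComponent` turns newlines into `%0A` and spaces into `%20`, the output is a single line with no whitespace, while `//` comments in the original script still end where they did.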

Open BOC's subscription website and click the bookmark on the bookmarks bar. And …

Click a name in the list on the right, and that person's credential (ID) number and mobile number will be filled into the corresponding fields automatically.

Attention: according to the risk-control rules, you should not use the same phone number for more than 5 subscriptions.
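To follow this advice while editing the subscriber list, a small check can flag phone numbers that appear in more than five entries. This is a hypothetical helper (`overusedPhones`) that assumes each subscriber object has a `phone` field; the limit of 5 is taken from the advice above, and the banks' actual rules may differ.

```javascript
// Limit taken from this README's advice; real risk-control rules may differ.
const MAX_USES_PER_PHONE = 5;

// Return { phone, count } for every phone number used more than `limit` times.
function overusedPhones(subscribers, limit = MAX_USES_PER_PHONE) {
  const counts = new Map();
  for (const { phone } of subscribers) {
    counts.set(phone, (counts.get(phone) ?? 0) + 1);
  }
  return [...counts]
    .filter(([, count]) => count > limit)
    .map(([phone, count]) => ({ phone, count }));
}
```

Calling it on the edited list before pasting the script (for example, logging each returned entry with `console.warn`) shows which numbers need to be spread across fewer subscribers.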